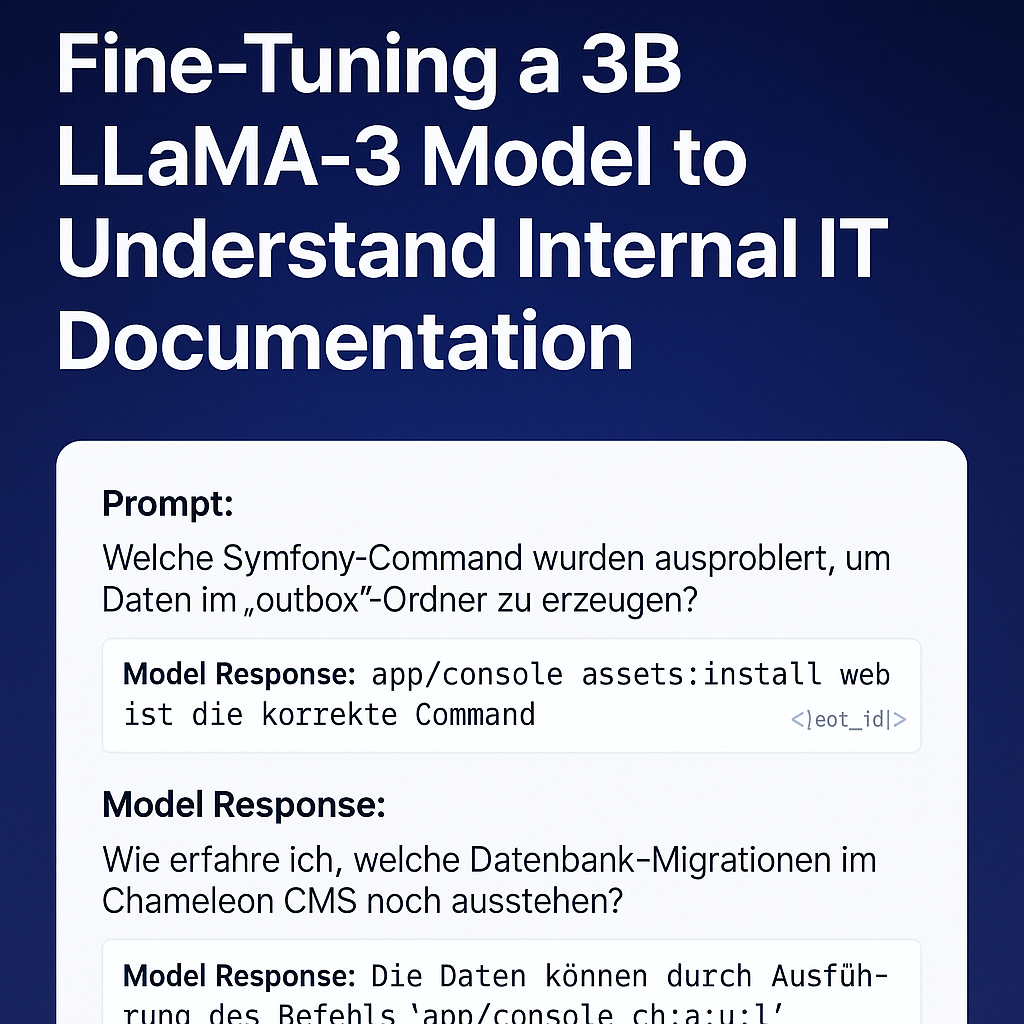

After several rounds of experimentation, I’ve finally completed my first successful fine-tuning of a LLaMA-based model on my own real-world internal IT documentation. The project fine-tunes a 3B-parameter model to understand and answer questions about Symfony command usage, Chameleon CMS workflows, and developer issues such as rsync errors or Xdebug failures.

The result is now publicly available on Hugging Face: kzorluoglu/chameleon-helper

Setup Overview

I used the excellent Unsloth Synthetic Data Kit and trained the model on a curated dataset of 32 instruction-based Q&A pairs, written in natural German developer language.

Instead of the usual instruction/input/output JSON format, I opted for a human-friendly, wiki-style documentation format to keep the prompts intuitive and domain-specific.
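The post doesn’t show how the Q&A pairs were rendered for training. As a hedged sketch, assuming the standard Llama 3 chat format, a single pair could be turned into one training string as shown below. The same format explains the `<|eot_id|>` end-of-turn token that shows up in the model outputs. This hand-written version is simplified. The official Llama 3.2 template, which `tokenizer.apply_chat_template` in Hugging Face transformers applies, also inserts a default system preamble, so a real pipeline should use the tokenizer’s template instead. `format_qa_pair` is a hypothetical helper name.

```python
from typing import Optional

# Simplified Llama 3-style chat format. ASSUMPTION: the official Llama 3.2
# template additionally injects a default system preamble, omitted here.
BOS = "<|begin_of_text|>"
EOT = "<|eot_id|>"  # end-of-turn marker, also visible in the raw model outputs


def header(role: str) -> str:
    """Return the role header that opens each turn."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def format_qa_pair(question: str, answer: str, system: Optional[str] = None) -> str:
    """Render one Q&A pair as a single training string (hypothetical helper)."""
    parts = [BOS]
    if system:
        parts.append(header("system") + system + EOT)
    parts.append(header("user") + question + EOT)
    parts.append(header("assistant") + answer + EOT)
    return "".join(parts)


if __name__ == "__main__":
    # Reuses the prompt/answer from Example 1 as illustration.
    print(format_qa_pair(
        "Welche Symfony-Command wurden ausprobiert, um Daten im 'outbox'-Ordner zu erzeugen?",
        "app/console assets:install web ist die korrekte Command",
    ))
```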

Training Pipeline

The base model was unsloth/Llama-3.2-3B-Instruct, fine-tuned with LoRA on Google Colab using:

- 60 training steps

- 4-bit quantization

- 2048 token context window

- TRL’s `SFTTrainer` (Supervised Fine-Tuning Trainer)
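The post gives the step count but not the batch size. Here is some rough arithmetic. Assume a per-device batch size of 2 and 4 gradient-accumulation steps on a single GPU. These values are common in Unsloth’s Colab notebooks but are not confirmed for this run. Under those assumptions, 60 optimizer steps correspond to about 15 passes over the 32 examples:

```python
# Rough arithmetic: how often does a 60-step run see each training example?
# ASSUMPTION: batch size 2 and gradient accumulation 4 on one device;
# the post does not state the values actually used.


def samples_seen(max_steps: int, per_device_batch: int, grad_accum: int, num_devices: int = 1) -> int:
    """Each optimizer step consumes per_device_batch * grad_accum * num_devices examples."""
    return max_steps * per_device_batch * grad_accum * num_devices


def approx_epochs(max_steps: int, dataset_size: int, per_device_batch: int,
                  grad_accum: int, num_devices: int = 1) -> float:
    """Approximate passes over the dataset (ignores packing and partial batches)."""
    return samples_seen(max_steps, per_device_batch, grad_accum, num_devices) / dataset_size


if __name__ == "__main__":
    seen = samples_seen(max_steps=60, per_device_batch=2, grad_accum=4)
    epochs = approx_epochs(max_steps=60, dataset_size=32, per_device_batch=2, grad_accum=4)
    print(f"{seen} examples seen, about {epochs:.1f} epochs")
```

If those assumptions hold, each example was seen about 15 times. That may help explain the near-verbatim recall of documented commands. With a larger dataset, the same 60 steps would mean far fewer passes per example.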

Thanks to LoRA, only a small fraction of the parameters (~1–10%) was updated, which kept the process lightweight and efficient.
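LoRA keeps the base weights frozen and trains two small matrices for each targeted layer. For a weight of shape d_out × d_in and rank r, the adapter adds r·(d_in + d_out) parameters. The sketch below counts them under several assumptions, none of which are stated in the post. It assumes rank 16 on all seven attention and MLP projections. It also assumes the Llama-3.2-3B config values: hidden size 3072, MLP size 8192, 28 layers, and 8 key/value heads of size 128.

```python
# Count trainable LoRA parameters for a Llama-style decoder.
# ASSUMPTIONS: rank r applied to q/k/v/o and gate/up/down projections,
# with Llama-3.2-3B dimensions passed in below.


def lora_params(d_in: int, d_out: int, r: int) -> int:
    """A is (r x d_in) and B is (d_out x r), so the adapter has r * (d_in + d_out) weights."""
    return r * (d_in + d_out)


def llama_lora_trainable(hidden: int, intermediate: int, kv_dim: int, layers: int, r: int) -> int:
    """Sum LoRA parameters over every targeted projection in every layer."""
    shapes = {  # name: (d_in, d_out)
        "q_proj": (hidden, hidden),
        "k_proj": (hidden, kv_dim),
        "v_proj": (hidden, kv_dim),
        "o_proj": (hidden, hidden),
        "gate_proj": (hidden, intermediate),
        "up_proj": (hidden, intermediate),
        "down_proj": (intermediate, hidden),
    }
    per_layer = sum(lora_params(d_in, d_out, r) for d_in, d_out in shapes.values())
    return per_layer * layers


if __name__ == "__main__":
    trainable = llama_lora_trainable(hidden=3072, intermediate=8192, kv_dim=8 * 128, layers=28, r=16)
    total = 3.2e9  # approximate size of the 3B base model
    print(f"{trainable:,} trainable parameters, ~{100 * trainable / total:.2f}% of the base model")
```

Under these assumptions the adapters add 24,313,856 trainable parameters, under 1% of the roughly 3.2 billion in the base model. The exact share depends on the rank and on which projections are targeted.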

Inference Results

I ran a couple of test prompts using the trained model:

Example 1

Prompt: Welche Symfony-Command wurden ausprobiert, um Daten im 'outbox'-Ordner zu erzeugen?
(English: Which Symfony commands were tried to generate data in the 'outbox' folder?)

Model Response: app/console assets:install web ist die korrekte Command<|eot_id|>
(English: app/console assets:install web is the correct command.)

Perfect result! Matches my internal documentation exactly.
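The raw outputs end with `<|eot_id|>`, Llama 3’s end-of-turn token. Most likely this is because the text was decoded with special tokens kept. With Hugging Face transformers, `tokenizer.decode(ids, skip_special_tokens=True)` drops them. If only the raw string is available, a small regex cleanup like this sketch does the same job. It assumes every special token follows the `<|name|>` pattern.

```python
import re

# Llama 3 special tokens look like <|eot_id|>, <|begin_of_text|>, <|start_header_id|>.
SPECIAL_TOKEN = re.compile(r"<\|[a-z0-9_]+\|>")


def clean_response(raw: str) -> str:
    """Strip Llama 3-style special tokens and surrounding whitespace from decoded text."""
    return SPECIAL_TOKEN.sub("", raw).strip()


if __name__ == "__main__":
    print(clean_response("app/console assets:install web ist die korrekte Command<|eot_id|>"))
```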

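Example 2

Prompt: Wie erfahre ich, welche Datenbank-Migrationen im Chameleon CMS noch ausstehen?
(English: How do I find out which database migrations are still pending in Chameleon CMS?)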

Model Response: Die Daten können durch Ausführung des Befehrs `app/console ch:a:u:l` ermittelt werden.<|eot_id|>
(English: The data can be determined by running the command `app/console ch:a:u:l`.)

There are slight typos: “app/console ch:a:u:l” instead of “app/console ch:u:l”, and “Befehrs” instead of “Befehls”. Still, the core answer was semantically correct.
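Judging answers by eye works for two prompts, but a larger test set calls for automatic scoring. The sketch below uses hypothetical helper names and assumes each answer contains an `app/console <command>` call. It extracts the command and reports an exact match plus a character-level similarity from Python’s `difflib`. That separates near-misses like `ch:a:u:l` versus `ch:u:l` from outright wrong answers.

```python
import re
from difflib import SequenceMatcher
from typing import Optional, Tuple

# Hypothetical pattern: the Symfony command name that follows "app/console".
COMMAND = re.compile(r"app/console\s+([\w:-]+)")


def extract_command(response: str) -> Optional[str]:
    """Return the first command name after app/console, or None if absent."""
    match = COMMAND.search(response)
    return match.group(1) if match else None


def score(response: str, expected: str) -> Tuple[bool, float]:
    """Return (exact match, character similarity in [0, 1]) for the extracted command."""
    predicted = extract_command(response) or ""
    return predicted == expected, SequenceMatcher(None, predicted, expected).ratio()


if __name__ == "__main__":
    cases = [
        ("app/console assets:install web ist die korrekte Command<|eot_id|>", "assets:install"),
        ("Die Daten können durch Ausführung des Befehrs `app/console ch:a:u:l` ermittelt werden.", "ch:u:l"),
    ]
    for response, expected in cases:
        exact, similarity = score(response, expected)
        print(f"expected={expected!r} exact={exact} similarity={similarity:.2f}")
```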

Lessons Learned

- This successful run came after several iterations of refining the dataset and its formatting.

- With just 32 well-written examples, the model already shows a solid grasp of custom internal logic in the test prompts.

- A few spelling issues remain, but I expect these to decrease significantly with a larger, higher-quality dataset (e.g., 200–500 examples).
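Growing the dataset to a few hundred examples makes a basic hygiene pass worthwhile. This sketch assumes, hypothetically, that each pair is stored as a dict with `question` and `answer` keys. It drops incomplete pairs and removes duplicate questions after normalizing case and whitespace. It then writes JSONL with `ensure_ascii=False` so German umlauts stay readable.

```python
import json
from typing import Dict, Iterable, List


def clean_pairs(pairs: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Drop pairs with an empty question or answer and de-duplicate by normalized question."""
    seen = set()
    kept = []
    for pair in pairs:
        question = pair.get("question", "").strip()
        answer = pair.get("answer", "").strip()
        if not question or not answer:
            continue  # incomplete pair
        key = " ".join(question.lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # duplicate question
        seen.add(key)
        kept.append({"question": question, "answer": answer})
    return kept


def to_jsonl(pairs: Iterable[Dict[str, str]]) -> str:
    """Serialize one JSON object per line, keeping non-ASCII characters as-is."""
    return "\n".join(json.dumps(p, ensure_ascii=False) for p in pairs)


if __name__ == "__main__":
    raw = [
        {"question": "Wie starte ich Xdebug?", "answer": "..."},
        {"question": "  wie starte ich   Xdebug? ", "answer": "duplicate"},
        {"question": "Leere Antwort?", "answer": ""},
    ]
    print(to_jsonl(clean_pairs(raw)))
```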

Model on Hugging Face

You can try the fine-tuned model directly here:

kzorluoglu/chameleon-helper on Hugging Face
