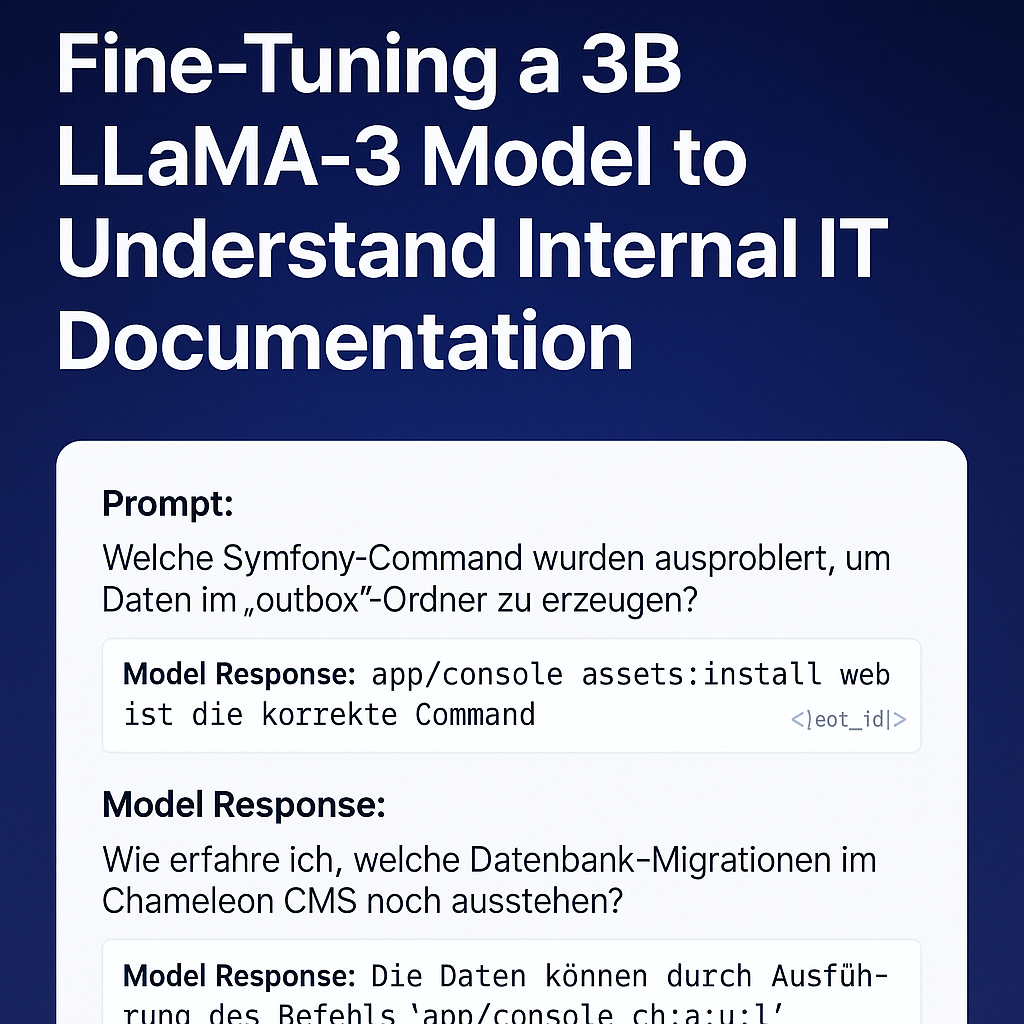

After several trials and experiments, I’ve finally completed my first successful fine-tuning of a LLaMA-based model using my own real-world internal IT documentation. This project fine-tunes a 3B parameter model to understand and respond to Symfony command usage, Chameleon CMS workflows, and developer issues like rsync errors or Xdebug debugging failures. The result is now publicly available on Hugging Face: kzorluoglu/chameleon-helper

Setup Overview

I used the excellent Unsloth Synthetic Data Kit and trained the model on a curated dataset of 32 instruction-based Q&A pairs, written in natural German developer language. Instead of the usual instruction/input/output JSON format, I opted for […]
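For readers unfamiliar with it, the usual instruction/input/output JSON format mentioned here can be sketched as below. This is only an illustration of that common layout, not the format the author ended up using; the helper name `make_record` and the sample question and answer are hypothetical and do not come from the actual dataset.

```python
import json


def make_record(instruction: str, output: str, input_text: str = "") -> dict:
    """Build one training example in the common instruction/input/output layout.

    Hypothetical helper for illustration; not part of any library.
    """
    return {"instruction": instruction, "input": input_text, "output": output}


# Hypothetical sample pair (the author's real dataset is written in German).
record = make_record(
    instruction="How do I list all available Symfony console commands?",
    output="Run `php bin/console list` from the project root.",
)

# One JSON object per line (JSONL); ensure_ascii=False keeps umlauts readable.
line = json.dumps(record, ensure_ascii=False)
print(line)
```

The `input` field is left empty when a question needs no extra context, which is typical for short Q&A pairs like these.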
