Usage: Here is a simple script to achieve this, along with the steps to make your create-db.sh script globally accessible. Optionally, you can rename the script to just create-db for easier use.
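The script and steps themselves are not part of this excerpt. As a minimal sketch, assuming a Bash-compatible shell and that ~/.local/bin is on your PATH (common, but not guaranteed; the post may instead use /usr/local/bin, which usually needs sudo), the general idea is to make the script executable and place it in a directory on PATH, optionally under a shorter name. The function install_global is hypothetical and not taken from the original post.

```shell
# Hypothetical helper (not from the original post): copy a script into a
# directory on PATH, optionally under a new name, and mark it executable.
# Assumption: the default target ~/.local/bin is on your PATH.
install_global() {
  local src="$1"                              # e.g. create-db.sh
  local name="${2:-$(basename "$src")}"       # optional new name, e.g. create-db
  local dest_dir="${3:-$HOME/.local/bin}"     # target directory (assumed on PATH)

  # Refuse to continue if the source script does not exist.
  if [ ! -f "$src" ]; then
    echo "install_global: no such file: $src" >&2
    return 1
  fi

  mkdir -p "$dest_dir"                        # create the target directory if needed
  cp "$src" "$dest_dir/$name"                 # copy under the chosen name
  chmod +x "$dest_dir/$name"                  # make it executable
  echo "Installed $dest_dir/$name"
}
```

For example, install_global create-db.sh create-db would let you run create-db from any directory. If ~/.local/bin is not on your PATH yet, adding export PATH="$HOME/.local/bin:$PATH" to ~/.bashrc is one common fix.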

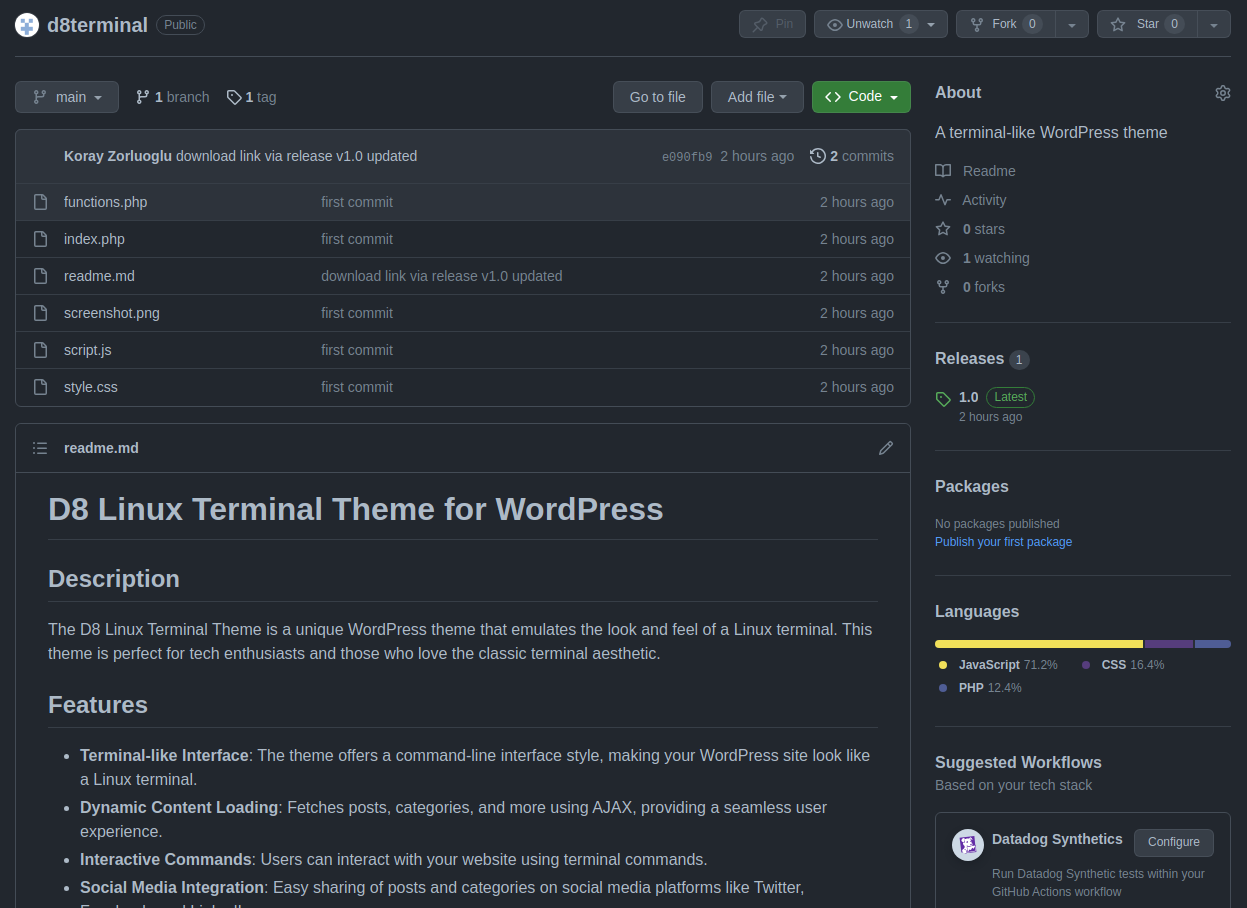

D8 Linux Terminal Theme on GitHub

The D8 Linux Terminal Theme is not just another WordPress theme. It’s a passion project, born out of my love for the classic Linux terminal interface. I’ve designed this theme to mimic the look and feel of a terminal, providing an immersive experience for users and visitors alike. To install the D8 Linux Terminal Theme, follow these steps: […]

Unveiling the Unique Essence of Our D8 Linux Terminal Theme

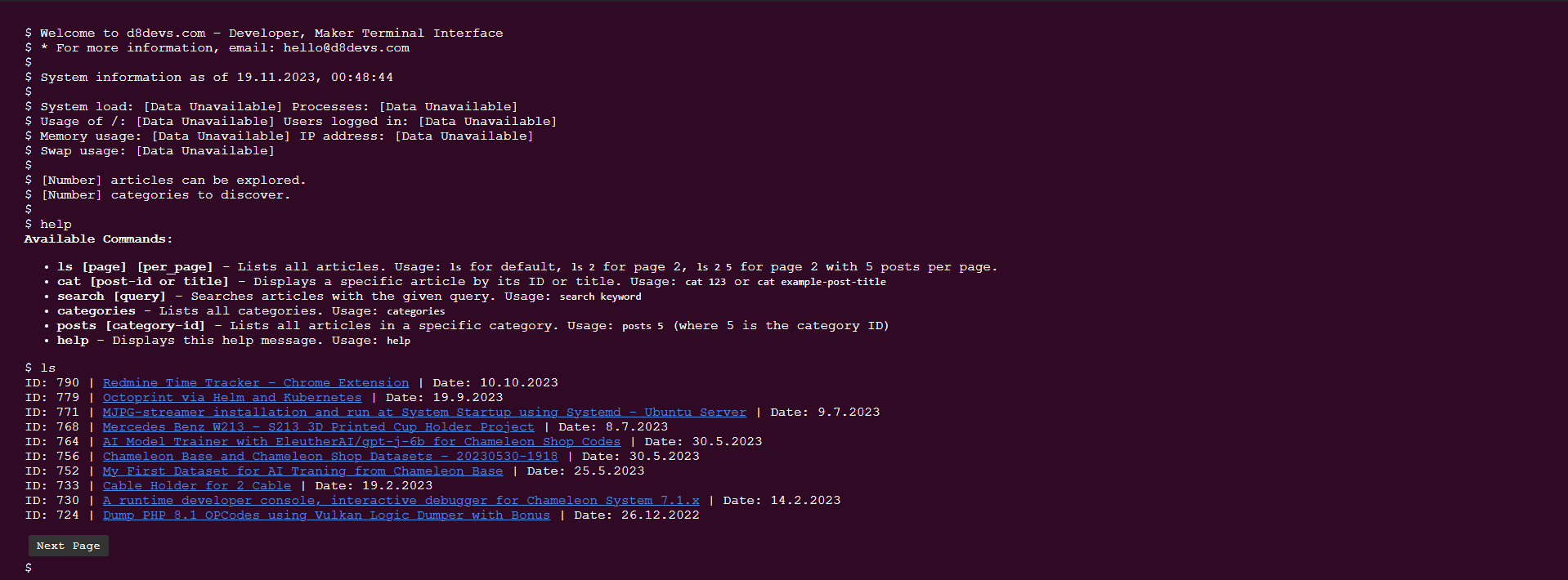

Hello, fellow developers and enthusiasts! Today, I want to share the story behind our D8 Linux Terminal Theme, a project that’s close to our hearts. Recently, we tried to upload this theme to the WordPress theme directory but ran into some unexpected challenges. The review team’s concerns centered mainly on the theme’s purpose and its unconventional design approach. Why this theme? Our D8 Linux Terminal Theme is not your typical WordPress theme. It’s designed for those who love the simplicity and nostalgia of the Linux terminal. We wanted to break away from the conventional design norms of WordPress themes – […]

Introducing the D8 Linux Terminal Theme: A Unique WordPress Experience

Hello, WordPress enthusiasts and developers! Today, I’m thrilled to introduce a new addition to the WordPress theme repository – the D8 Linux Terminal Theme. This theme is designed for those who love the simplicity and efficiency of a Linux terminal and want to bring that experience to their WordPress site. What is the D8 Linux Terminal Theme? The D8 Linux Terminal Theme is a WordPress theme that transforms your website’s interface into a Linux terminal-like environment. It’s perfect for developers, tech enthusiasts, or anyone who wants a unique and minimalist design for their website. This theme is not just about […]

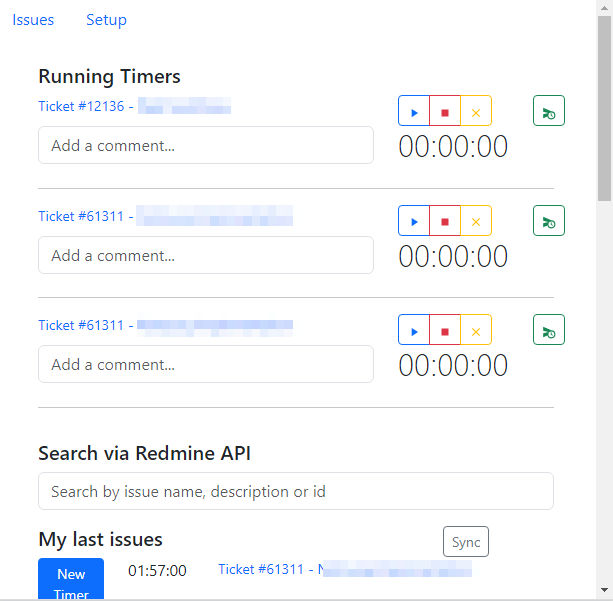

Redmine Time Tracker – Chrome Extension

A Chrome extension built with Vue.js to efficiently track time for Redmine tickets.
Setup Page · Issues Page · Search Results · Features · Steps to Use the Extension: https://github.com/kzorluoglu/kedmine-chrome#steps-to-use-the-extension
New Feature – 16.10.2023: CSV to Redmine Table Converter
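The excerpt names a "CSV to Redmine Table Converter" feature but does not describe how it works. As a rough illustration only (the extension itself is written with Vue.js, and this is not its code), the conversion could look like the shell function below. Assumptions: the Redmine project uses Textile formatting (Redmine also supports Markdown), the first CSV row is the header, and fields contain no quotes, embedded commas, or pipe characters. The name csv_to_textile is hypothetical.

```shell
# Hypothetical helper: read simple CSV on stdin and print a Redmine Textile table.
# Header cells use the Textile "|_. text |" syntax; body cells use "| text |".
# Assumptions: first line is the header; no quoted fields, embedded commas, or pipes.
csv_to_textile() {
  awk -F',' '
    {
      sub(/\r$/, "")          # tolerate Windows line endings (re-splits fields)
      if (NF == 0) next       # skip blank lines
      line = "|"
      for (i = 1; i <= NF; i++) {
        if (NR == 1) line = line "_. " $i " |"   # header cell
        else         line = line " " $i " |"     # body cell
      }
      print line
    }'
}
```

For example, piping a file such as hours.csv through csv_to_textile prints a table you can paste into a Redmine wiki page or issue description.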